Introducing the context of the study

The right to non-discrimination is one of the fundamental values of the European Union (EU): see, for example, Articles 2 and 10 of the Treaty on European Union (TEU) or Article 21 of the Charter of Fundamental Rights of the EU. It comes as no surprise that digital ecosystems are also subject to the laws set out in these documents. Particularly important is the possible occurrence of discriminatory practices in content moderation on online social media platforms, an area that seems largely under-investigated. The matter becomes even more critical in the context of the Digital Services Act (DSA) proposal, which sets rules to address negative effects on the fundamental right to non-discrimination, for example as regards the content moderation systems used by large online social media platforms.

There are several challenges to conducting such an investigation. First, because algorithmic systems are socio-technical systems, the origin of discrimination is difficult to detect. Second, there is little pre-existing evidence on which to base the investigation. More specifically, while the discriminatory effects of content moderation on online platforms are relatively well documented in the United States, equivalent evidence is lacking in the European context. With the DSA in mind, the European Commission’s interest in gathering information on the topic led to the project The Observatory on the Online Platform Economy, which monitors the evolution of the online platform economy to advise and support the Commission in its policy-making in relation to online platforms.

As a part of this project, eight different but interconnected workstreams were formed, one of which focuses on Algorithmic Discrimination, led by an Expert Group and supported by Visionary Analytics. It is precisely in the context of this workstream that Visionary Analytics performed a literature review and a case study analysis on the topic of algorithmic discrimination and content moderation on large online social media platforms.

Below we present some findings from our research, highlighting the state of the art in the field of fairness and accountability in machine learning and other technologies, as well as examples illustrating discrimination resulting from algorithm-driven content moderation on online social media platforms.

Defining algorithmic discrimination: a new conceptualisation

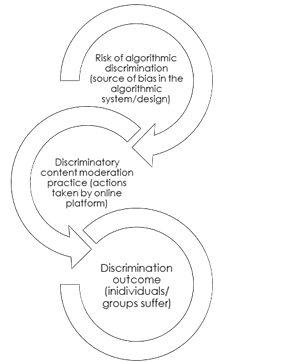

In an emerging field such as machine learning, terminology often varies. It comes as no surprise, then, that there is no clear-cut definition of algorithmic discrimination in the literature. However, the notions of ‘bias’ and ‘fairness’ help structure the discourse on algorithmic discrimination. Building on these, our study extends discrimination to include specific unfavourable outcomes not covered by the law, i.e., those relating to hate speech and freedom of expression, and develops a new construction of ‘algorithmic discrimination’ as a process with three steps:

- A risk factor for discrimination forms, i.e., a source of bias in the algorithmic system and/or its design.

- The online social media platform applies a content moderation practice that is driven by the potential underlying sources of bias described in the first step.

- The bias in the algorithms takes effect, leading to a discriminatory outcome.

Step 1: How and where do risk factors of algorithmic discrimination occur?

Step 1 of algorithmic discrimination, as described above, is the formation of a risk factor. But how and where do these risk factors occur? We find that bias may occur at three different levels:

- The values, rules, and standards set forth by online platforms may contain risk factors leading to algorithmic discrimination, such as the difficulty of translating legal principles to context-specific situations and the biased enforcement of contractual obligations.

- Legal incentives for over-moderation may arise from legal ambiguity, private ordering, and the territoriality of law, among others.

- Technical-level characteristics can increase the risk of discrimination through the personal and cultural biases of researchers working on training data, redundant encodings, the employment of ‘bad labour’, and others.

Step 2: What are the content moderation actions online social media platforms take that may lead to discriminatory outcomes?

Following the new conceptualisation of algorithmic discrimination, Step 2 is the application of a moderation practice by a platform. It is therefore useful to understand what content moderation means. However, the language of content moderation is continuously evolving with the advent of new systems, methods, and frameworks for policing online communities.

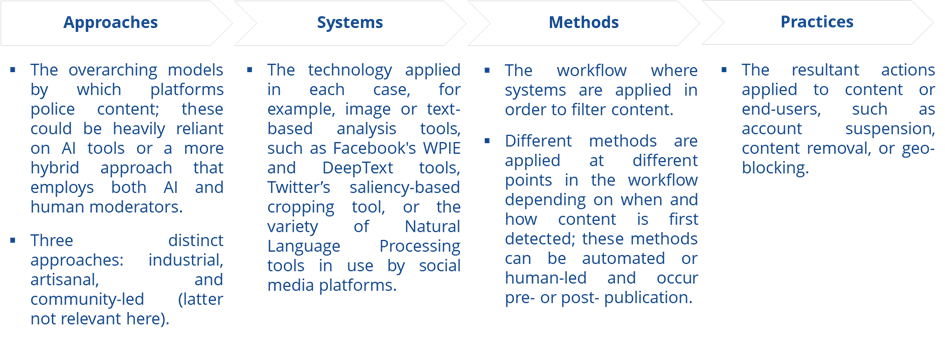

To navigate a typology still in its infancy and to provide a more thorough breakdown of algorithmic content moderation, we also defined and distinguished the following key concepts: content moderation approaches, content moderation systems, content moderation methods, and content moderation practices. These are presented in the accompanying figure.

This typology classifies the content moderation actions that may, in various ways, contribute to an increased risk of discrimination when driven by the potential underlying sources of bias described in the first step.

Step 3: What are examples of discriminatory outcomes and how do such consequences occur?

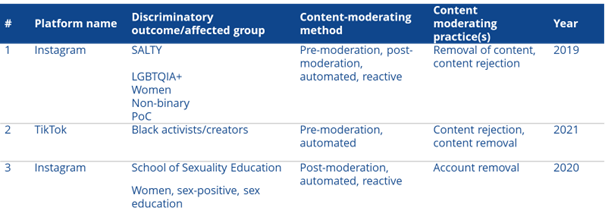

As a part of the Case Study Analysis, we examined three cases of potential algorithmic discrimination:

An overview of cases with discriminatory outcomes experienced on online social media platforms. Source: Visionary Analytics, 2021

In the Case Study Analysis report, we carry out an analysis of the technologies used in each case, the methods of identification of the incident, mitigation actions, and the actors involved. Here, however, we briefly introduce each case.

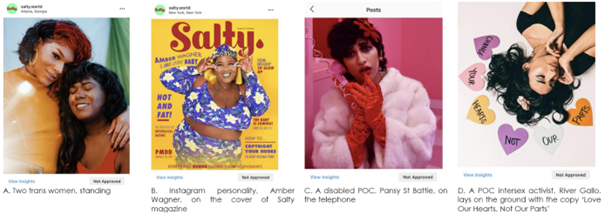

The Case of Salty

Salty, a digital publication aimed at women and at transgender, gender non-conforming, and non-binary people, called out Instagram for banning six ads featuring transgender and non-binary people of colour.

According to Instagram, the ads in question promoted escorting services, though nobody featured in the ads was a sex worker.

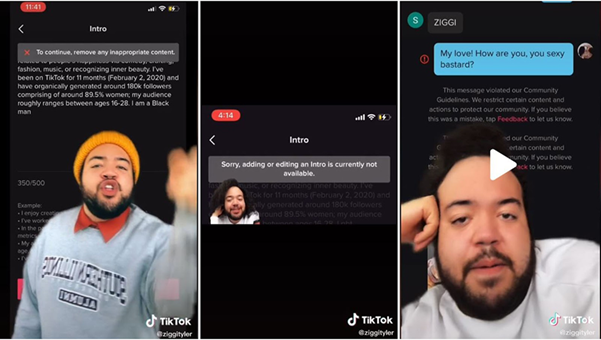

The Case of Ziggi Tyler (and black content creators)

Ziggi Tyler could not include in his bio phrases containing the word ‘Black’, such as ‘Black Lives Matter’, ‘black people’, ‘black voices’, and ‘black success’, or even the simple statement ‘I am a black man’. Each of these immediately triggered a pop-up message prompting him to ‘remove any inappropriate content’.

The Case of the School of Sexuality Education

The School of Sexuality Education Instagram account was disabled for not following ‘Community Guidelines’ because ‘sexually suggestive content isn’t allowed on Instagram’.

After failing to hear back from Instagram regarding an appeal against the decision, the organisation reported the issue through Report Harmful Content. Their account was reactivated with no further explanation.

Our contributions culminated in a presentation of the research findings at the workshop organised by the European Commission

At the workshop, held on September 20th, 2022, experts from academia and the private sector discussed the nature and risks of algorithmic discrimination, mitigation measures, the way forward for European policymakers in the context of the DSA, and the risks of discriminatory outcomes arising from the algorithmic systems and content moderation measures put in place by large social media platforms.

During the workshop, Nini Gigani, a Researcher at Visionary Analytics, presented the conclusions of our investigation, proposing a definition of algorithmic discrimination and a typology of content moderation as a part of the literature review, coupled with three case studies of discriminatory outcomes that have occurred on large social media platforms in recent years.

Check out more about the workshop here.

To find out more, you can check out the work of the Observatory on the Platform Economy on the project website. Or simply drop us a line!